Visualizing and Comparing LIS Output¶

LIS Output Primer¶

LIS writes model state variables to disk at a frequency selected by the user (e.g., 6-hourly, daily, monthly). The LIS output we will be exploring was originally generated as daily NetCDF files, meaning one NetCDF was written per simulated day. We have converted these NetCDF files into a Zarr store for improved performance in the cloud.

Import Libraries¶

# interface to Amazon S3 filesystem

import s3fs

# interact with n-d arrays

import numpy as np

import xarray as xr

# interact with tabular data (incl. spatial)

import pandas as pd

import geopandas as gpd

# interactive plots

import holoviews as hv

import geoviews as gv

import hvplot.pandas

import hvplot.xarray

# used to find nearest grid cell to a given location

from scipy.spatial import distance

# set bokeh as the holoviews plotting backend

hv.extension('bokeh')

Load the LIS Output¶

The xarray library makes working with labelled n-dimensional arrays easy and efficient. If you’re familiar with the pandas library it should feel pretty familiar.

Here we load the LIS output into an xarray.Dataset object:

# create S3 filesystem object

s3 = s3fs.S3FileSystem(anon=False)

# define the name of our S3 bucket

bucket_name = 'eis-dh-hydro/SNOWEX-HACKWEEK'

# define path to store on S3

lis_output_s3_path = f's3://{bucket_name}/DA_SNODAS/SURFACEMODEL/LIS_HIST.d01.zarr/'

# create key-value mapper for S3 object (required to read data stored on S3)

lis_output_mapper = s3.get_mapper(lis_output_s3_path)

# open the dataset

lis_output_ds = xr.open_zarr(lis_output_mapper, consolidated=True)

# drop some unneeded variables

lis_output_ds = lis_output_ds.drop_vars(['_history', '_eis_source_path'])

Explore the Data¶

Display an interactive widget for inspecting the dataset by running a cell containing the variable name. Expand the dropdown menus and click on the document and database icons to inspect the variables and attributes.

lis_output_ds

<xarray.Dataset>

Dimensions: (time: 730, north_south: 215, east_west: 361, SoilMoist_profiles: 4)

Coordinates:

* time (time) datetime64[ns] 2016-10-01 2016-10-02 ... 2018-09-30

Dimensions without coordinates: north_south, east_west, SoilMoist_profiles

Data variables: (12/26)

Albedo_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

CanopInt_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ECanop_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ESoil_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

GPP_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

LAI_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

... ...

Swnet_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TVeg_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TWS_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TotalPrecip_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lat (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lon (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Attributes: (12/14)

DX: 0.10000000149011612

DY: 0.10000000149011612

MAP_PROJECTION: EQUIDISTANT CYLINDRICAL

NUM_SOIL_LAYERS: 4

SOIL_LAYER_THICKNESSES: [10.0, 30.000001907348633, 60.000003814697266, 1...

SOUTH_WEST_CORNER_LAT: 28.549999237060547

... ...

conventions: CF-1.6

institution: NASA GSFC

missing_value: -9999.0

references: Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

source: Noah-MP.4.0.1

title: LIS land surface model output- time: 730

- north_south: 215

- east_west: 361

- SoilMoist_profiles: 4

- time(time)datetime64[ns]2016-10-01 ... 2018-09-30

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2016-10-01T00:00:00.000000000', '2016-10-02T00:00:00.000000000', '2016-10-03T00:00:00.000000000', ..., '2018-09-28T00:00:00.000000000', '2018-09-29T00:00:00.000000000', '2018-09-30T00:00:00.000000000'], dtype='datetime64[ns]')

- Albedo_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface albedo

- standard_name :

- surface_albedo

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - CanopInt_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- total canopy water storage

- standard_name :

- total_canopy_water_storage

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - ECanop_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- interception evaporation

- standard_name :

- interception_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - ESoil_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- bare soil evaporation

- standard_name :

- bare_soil_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - GPP_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- gross primary production

- standard_name :

- gross_primary_production

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - LAI_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- leaf area index

- standard_name :

- leaf_area_index

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - LWdown_f_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface downward longwave radiation

- standard_name :

- surface_downwelling_longwave_flux_in_air

- units :

- W m-2

- vmax :

- 750.0

- vmin :

- 0.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Lwnet_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net downward longwave radiation

- standard_name :

- surface_net_downward_longwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - NEE_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net ecosystem exchange

- standard_name :

- net_ecosystem_exchange

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Qg_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- soil heat flux

- standard_name :

- downward_heat_flux_in_soil

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Qh_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- sensible heat flux

- standard_name :

- surface_upward_sensible_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Qle_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- latent heat flux

- standard_name :

- surface_upward_latent_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Qs_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface runoff

- standard_name :

- surface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Qsb_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- subsurface runoff amount

- standard_name :

- subsurface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - RadT_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface radiative temperature

- standard_name :

- surface_radiative_temperature

- units :

- K

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - SWE_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow water equivalent

- standard_name :

- liquid_water_content_of_surface_snow

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - SWdown_f_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface downward shortwave radiation

- standard_name :

- surface_downwelling_shortwave_flux_in_air

- units :

- W m-2

- vmax :

- 1360.0

- vmin :

- 0.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - SnowDepth_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Snowcover_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow cover

- standard_name :

- surface_snow_area_fraction

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - SoilMoist_tavg(time, SoilMoist_profiles, north_south, east_west)float32dask.array<chunksize=(1, 4, 215, 361), meta=np.ndarray>

- long_name :

- soil moisture content

- standard_name :

- soil_moisture_content

- units :

- m^3 m-3

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 864.55 MiB 1.18 MiB Shape (730, 4, 215, 361) (1, 4, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - Swnet_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net downward shortwave radiation

- standard_name :

- surface_net_downward_shortwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - TVeg_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- vegetation transpiration

- standard_name :

- vegetation_transpiration

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - TWS_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- terrestrial water storage

- standard_name :

- terrestrial_water_storage

- units :

- mm

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - TotalPrecip_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- total precipitation amount

- standard_name :

- total_precipitation_amount

- units :

- kg m-2 s-1

- vmax :

- 0.019999999552965164

- vmin :

- 0.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - lat(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degree_north

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - lon(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degree_east

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray

- DX :

- 0.10000000149011612

- DY :

- 0.10000000149011612

- MAP_PROJECTION :

- EQUIDISTANT CYLINDRICAL

- NUM_SOIL_LAYERS :

- 4

- SOIL_LAYER_THICKNESSES :

- [10.0, 30.000001907348633, 60.000003814697266, 100.0]

- SOUTH_WEST_CORNER_LAT :

- 28.549999237060547

- SOUTH_WEST_CORNER_LON :

- -113.94999694824219

- comment :

- website: http://lis.gsfc.nasa.gov/

- conventions :

- CF-1.6

- institution :

- NASA GSFC

- missing_value :

- -9999.0

- references :

- Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

- source :

- Noah-MP.4.0.1

- title :

- LIS land surface model output

Accessing Attributes¶

Dataset attributes (metadata) are accessible via the attrs attribute:

lis_output_ds.attrs

{'DX': 0.10000000149011612,

'DY': 0.10000000149011612,

'MAP_PROJECTION': 'EQUIDISTANT CYLINDRICAL',

'NUM_SOIL_LAYERS': 4,

'SOIL_LAYER_THICKNESSES': [10.0,

30.000001907348633,

60.000003814697266,

100.0],

'SOUTH_WEST_CORNER_LAT': 28.549999237060547,

'SOUTH_WEST_CORNER_LON': -113.94999694824219,

'comment': 'website: http://lis.gsfc.nasa.gov/',

'conventions': 'CF-1.6',

'institution': 'NASA GSFC',

'missing_value': -9999.0,

'references': 'Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007',

'source': 'Noah-MP.4.0.1',

'title': 'LIS land surface model output'}

Accessing Variables¶

Variables can be accessed using either dot notation or square bracket notation:

# dot notation

lis_output_ds.SnowDepth_tavg

<xarray.DataArray 'SnowDepth_tavg' (time: 730, north_south: 215, east_west: 361)>

dask.array<open_dataset-7d66e42249419f6b85d6bd66542e643aSnowDepth_tavg, shape=(730, 215, 361), dtype=float32, chunksize=(1, 215, 361), chunktype=numpy.ndarray>

Coordinates:

* time (time) datetime64[ns] 2016-10-01 2016-10-02 ... 2018-09-30

Dimensions without coordinates: north_south, east_west

Attributes:

long_name: snow depth

standard_name: snow_depth

units: m

vmax: 999999986991104.0

vmin: -999999986991104.0- time: 730

- north_south: 215

- east_west: 361

- dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - time(time)datetime64[ns]2016-10-01 ... 2018-09-30

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2016-10-01T00:00:00.000000000', '2016-10-02T00:00:00.000000000', '2016-10-03T00:00:00.000000000', ..., '2018-09-28T00:00:00.000000000', '2018-09-29T00:00:00.000000000', '2018-09-30T00:00:00.000000000'], dtype='datetime64[ns]')

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

# square bracket notation

lis_output_ds['SnowDepth_tavg']

<xarray.DataArray 'SnowDepth_tavg' (time: 730, north_south: 215, east_west: 361)>

dask.array<open_dataset-7d66e42249419f6b85d6bd66542e643aSnowDepth_tavg, shape=(730, 215, 361), dtype=float32, chunksize=(1, 215, 361), chunktype=numpy.ndarray>

Coordinates:

* time (time) datetime64[ns] 2016-10-01 2016-10-02 ... 2018-09-30

Dimensions without coordinates: north_south, east_west

Attributes:

long_name: snow depth

standard_name: snow_depth

units: m

vmax: 999999986991104.0

vmin: -999999986991104.0- time: 730

- north_south: 215

- east_west: 361

- dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - time(time)datetime64[ns]2016-10-01 ... 2018-09-30

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2016-10-01T00:00:00.000000000', '2016-10-02T00:00:00.000000000', '2016-10-03T00:00:00.000000000', ..., '2018-09-28T00:00:00.000000000', '2018-09-29T00:00:00.000000000', '2018-09-30T00:00:00.000000000'], dtype='datetime64[ns]')

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Which syntax should I use?¶

While both syntaxes perform the same function, the square-bracket syntax is useful when interacting with a dataset programmatically. For example, we can define a variable varname that stores the name of the variable in the dataset we want to access and then use that with the square-brackets notation:

varname = 'SnowDepth_tavg'

lis_output_ds[varname]

<xarray.DataArray 'SnowDepth_tavg' (time: 730, north_south: 215, east_west: 361)>

dask.array<open_dataset-7d66e42249419f6b85d6bd66542e643aSnowDepth_tavg, shape=(730, 215, 361), dtype=float32, chunksize=(1, 215, 361), chunktype=numpy.ndarray>

Coordinates:

* time (time) datetime64[ns] 2016-10-01 2016-10-02 ... 2018-09-30

Dimensions without coordinates: north_south, east_west

Attributes:

long_name: snow depth

standard_name: snow_depth

units: m

vmax: 999999986991104.0

vmin: -999999986991104.0- time: 730

- north_south: 215

- east_west: 361

- dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Array Chunk Bytes 216.14 MiB 303.18 kiB Shape (730, 215, 361) (1, 215, 361) Count 731 Tasks 730 Chunks Type float32 numpy.ndarray - time(time)datetime64[ns]2016-10-01 ... 2018-09-30

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2016-10-01T00:00:00.000000000', '2016-10-02T00:00:00.000000000', '2016-10-03T00:00:00.000000000', ..., '2018-09-28T00:00:00.000000000', '2018-09-29T00:00:00.000000000', '2018-09-30T00:00:00.000000000'], dtype='datetime64[ns]')

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

The dot notation syntax will not work this way because xarray tries to find a variable in the dataset named varname instead of the value of the varname variable. When xarray can’t find this variable, it throws an error:

# uncomment and run the code below to see the error

# varname = 'SnowDepth_tavg'

# lis_output_ds.varname

Dimensions and Coordinate Variables¶

The dimensions and coordinate variable fields put the “labelled” in “labelled n-dimensional arrays”:

Dimensions: labels for each dimension in the dataset (e.g.,

time)Coordinates: labels for indexing along dimensions (e.g.,

'2019-01-01')

We can use these labels to select, slice, and aggregate the dataset.

Selecting/Subsetting¶

xarray provides two methods for selecting or subsetting along coordinate variables:

index selection:

ds.isel(time=0)value selection

ds.sel(time='2019-01-01')

For example, we can select the first timestep from our dataset using index selection by passing the dimension name as a keyword argument:

# remember: python indexes start at 0

lis_output_ds.isel(time=0)

<xarray.Dataset>

Dimensions: (north_south: 215, east_west: 361, SoilMoist_profiles: 4)

Coordinates:

time datetime64[ns] 2016-10-01

Dimensions without coordinates: north_south, east_west, SoilMoist_profiles

Data variables: (12/26)

Albedo_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

CanopInt_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

ECanop_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

ESoil_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

GPP_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

LAI_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

... ...

Swnet_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TVeg_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TWS_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TotalPrecip_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

lat (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

lon (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

Attributes: (12/14)

DX: 0.10000000149011612

DY: 0.10000000149011612

MAP_PROJECTION: EQUIDISTANT CYLINDRICAL

NUM_SOIL_LAYERS: 4

SOIL_LAYER_THICKNESSES: [10.0, 30.000001907348633, 60.000003814697266, 1...

SOUTH_WEST_CORNER_LAT: 28.549999237060547

... ...

conventions: CF-1.6

institution: NASA GSFC

missing_value: -9999.0

references: Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

source: Noah-MP.4.0.1

title: LIS land surface model output- north_south: 215

- east_west: 361

- SoilMoist_profiles: 4

- time()datetime64[ns]2016-10-01

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array('2016-10-01T00:00:00.000000000', dtype='datetime64[ns]')

- Albedo_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface albedo

- standard_name :

- surface_albedo

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - CanopInt_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- total canopy water storage

- standard_name :

- total_canopy_water_storage

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - ECanop_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- interception evaporation

- standard_name :

- interception_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - ESoil_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- bare soil evaporation

- standard_name :

- bare_soil_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - GPP_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- gross primary production

- standard_name :

- gross_primary_production

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - LAI_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- leaf area index

- standard_name :

- leaf_area_index

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - LWdown_f_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface downward longwave radiation

- standard_name :

- surface_downwelling_longwave_flux_in_air

- units :

- W m-2

- vmax :

- 750.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Lwnet_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net downward longwave radiation

- standard_name :

- surface_net_downward_longwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - NEE_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net ecosystem exchange

- standard_name :

- net_ecosystem_exchange

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qg_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- soil heat flux

- standard_name :

- downward_heat_flux_in_soil

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qh_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- sensible heat flux

- standard_name :

- surface_upward_sensible_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qle_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- latent heat flux

- standard_name :

- surface_upward_latent_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qs_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface runoff

- standard_name :

- surface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qsb_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- subsurface runoff amount

- standard_name :

- subsurface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - RadT_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface radiative temperature

- standard_name :

- surface_radiative_temperature

- units :

- K

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SWE_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow water equivalent

- standard_name :

- liquid_water_content_of_surface_snow

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SWdown_f_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface downward shortwave radiation

- standard_name :

- surface_downwelling_shortwave_flux_in_air

- units :

- W m-2

- vmax :

- 1360.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SnowDepth_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Snowcover_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow cover

- standard_name :

- surface_snow_area_fraction

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SoilMoist_tavg(SoilMoist_profiles, north_south, east_west)float32dask.array<chunksize=(4, 215, 361), meta=np.ndarray>

- long_name :

- soil moisture content

- standard_name :

- soil_moisture_content

- units :

- m^3 m-3

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 1.18 MiB 1.18 MiB Shape (4, 215, 361) (4, 215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Swnet_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net downward shortwave radiation

- standard_name :

- surface_net_downward_shortwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TVeg_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- vegetation transpiration

- standard_name :

- vegetation_transpiration

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TWS_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- terrestrial water storage

- standard_name :

- terrestrial_water_storage

- units :

- mm

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TotalPrecip_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- total precipitation amount

- standard_name :

- total_precipitation_amount

- units :

- kg m-2 s-1

- vmax :

- 0.019999999552965164

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - lat(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degree_north

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - lon(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degree_east

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray

- DX :

- 0.10000000149011612

- DY :

- 0.10000000149011612

- MAP_PROJECTION :

- EQUIDISTANT CYLINDRICAL

- NUM_SOIL_LAYERS :

- 4

- SOIL_LAYER_THICKNESSES :

- [10.0, 30.000001907348633, 60.000003814697266, 100.0]

- SOUTH_WEST_CORNER_LAT :

- 28.549999237060547

- SOUTH_WEST_CORNER_LON :

- -113.94999694824219

- comment :

- website: http://lis.gsfc.nasa.gov/

- conventions :

- CF-1.6

- institution :

- NASA GSFC

- missing_value :

- -9999.0

- references :

- Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

- source :

- Noah-MP.4.0.1

- title :

- LIS land surface model output

Or we can use value selection to select based on the coordinate(s) (think “labels”) of a given dimension:

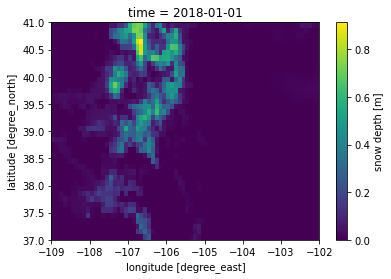

lis_output_ds.sel(time='2018-01-01')

<xarray.Dataset>

Dimensions: (north_south: 215, east_west: 361, SoilMoist_profiles: 4)

Coordinates:

time datetime64[ns] 2018-01-01

Dimensions without coordinates: north_south, east_west, SoilMoist_profiles

Data variables: (12/26)

Albedo_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

CanopInt_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

ECanop_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

ESoil_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

GPP_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

LAI_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

... ...

Swnet_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TVeg_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TWS_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

TotalPrecip_tavg (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

lat (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

lon (north_south, east_west) float32 dask.array<chunksize=(215, 361), meta=np.ndarray>

Attributes: (12/14)

DX: 0.10000000149011612

DY: 0.10000000149011612

MAP_PROJECTION: EQUIDISTANT CYLINDRICAL

NUM_SOIL_LAYERS: 4

SOIL_LAYER_THICKNESSES: [10.0, 30.000001907348633, 60.000003814697266, 1...

SOUTH_WEST_CORNER_LAT: 28.549999237060547

... ...

conventions: CF-1.6

institution: NASA GSFC

missing_value: -9999.0

references: Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

source: Noah-MP.4.0.1

title: LIS land surface model output- north_south: 215

- east_west: 361

- SoilMoist_profiles: 4

- time()datetime64[ns]2018-01-01

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array('2018-01-01T00:00:00.000000000', dtype='datetime64[ns]')

- Albedo_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface albedo

- standard_name :

- surface_albedo

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - CanopInt_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- total canopy water storage

- standard_name :

- total_canopy_water_storage

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - ECanop_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- interception evaporation

- standard_name :

- interception_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - ESoil_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- bare soil evaporation

- standard_name :

- bare_soil_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - GPP_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- gross primary production

- standard_name :

- gross_primary_production

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - LAI_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- leaf area index

- standard_name :

- leaf_area_index

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - LWdown_f_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface downward longwave radiation

- standard_name :

- surface_downwelling_longwave_flux_in_air

- units :

- W m-2

- vmax :

- 750.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Lwnet_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net downward longwave radiation

- standard_name :

- surface_net_downward_longwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - NEE_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net ecosystem exchange

- standard_name :

- net_ecosystem_exchange

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qg_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- soil heat flux

- standard_name :

- downward_heat_flux_in_soil

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qh_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- sensible heat flux

- standard_name :

- surface_upward_sensible_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qle_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- latent heat flux

- standard_name :

- surface_upward_latent_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qs_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface runoff

- standard_name :

- surface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Qsb_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- subsurface runoff amount

- standard_name :

- subsurface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - RadT_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface radiative temperature

- standard_name :

- surface_radiative_temperature

- units :

- K

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SWE_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow water equivalent

- standard_name :

- liquid_water_content_of_surface_snow

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SWdown_f_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- surface downward shortwave radiation

- standard_name :

- surface_downwelling_shortwave_flux_in_air

- units :

- W m-2

- vmax :

- 1360.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SnowDepth_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Snowcover_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- snow cover

- standard_name :

- surface_snow_area_fraction

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - SoilMoist_tavg(SoilMoist_profiles, north_south, east_west)float32dask.array<chunksize=(4, 215, 361), meta=np.ndarray>

- long_name :

- soil moisture content

- standard_name :

- soil_moisture_content

- units :

- m^3 m-3

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 1.18 MiB 1.18 MiB Shape (4, 215, 361) (4, 215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - Swnet_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- net downward shortwave radiation

- standard_name :

- surface_net_downward_shortwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TVeg_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- vegetation transpiration

- standard_name :

- vegetation_transpiration

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TWS_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- terrestrial water storage

- standard_name :

- terrestrial_water_storage

- units :

- mm

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - TotalPrecip_tavg(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- total precipitation amount

- standard_name :

- total_precipitation_amount

- units :

- kg m-2 s-1

- vmax :

- 0.019999999552965164

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - lat(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degree_north

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray - lon(north_south, east_west)float32dask.array<chunksize=(215, 361), meta=np.ndarray>

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degree_east

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 303.18 kiB 303.18 kiB Shape (215, 361) (215, 361) Count 732 Tasks 1 Chunks Type float32 numpy.ndarray

- DX :

- 0.10000000149011612

- DY :

- 0.10000000149011612

- MAP_PROJECTION :

- EQUIDISTANT CYLINDRICAL

- NUM_SOIL_LAYERS :

- 4

- SOIL_LAYER_THICKNESSES :

- [10.0, 30.000001907348633, 60.000003814697266, 100.0]

- SOUTH_WEST_CORNER_LAT :

- 28.549999237060547

- SOUTH_WEST_CORNER_LON :

- -113.94999694824219

- comment :

- website: http://lis.gsfc.nasa.gov/

- conventions :

- CF-1.6

- institution :

- NASA GSFC

- missing_value :

- -9999.0

- references :

- Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

- source :

- Noah-MP.4.0.1

- title :

- LIS land surface model output

The .sel() approach also allows the use of shortcuts in some cases. For example, here we select all timesteps in the month of January 2018:

lis_output_ds.sel(time='2018-01')

<xarray.Dataset>

Dimensions: (time: 31, north_south: 215, east_west: 361, SoilMoist_profiles: 4)

Coordinates:

* time (time) datetime64[ns] 2018-01-01 2018-01-02 ... 2018-01-31

Dimensions without coordinates: north_south, east_west, SoilMoist_profiles

Data variables: (12/26)

Albedo_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

CanopInt_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ECanop_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ESoil_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

GPP_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

LAI_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

... ...

Swnet_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TVeg_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TWS_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TotalPrecip_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lat (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lon (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Attributes: (12/14)

DX: 0.10000000149011612

DY: 0.10000000149011612

MAP_PROJECTION: EQUIDISTANT CYLINDRICAL

NUM_SOIL_LAYERS: 4

SOIL_LAYER_THICKNESSES: [10.0, 30.000001907348633, 60.000003814697266, 1...

SOUTH_WEST_CORNER_LAT: 28.549999237060547

... ...

conventions: CF-1.6

institution: NASA GSFC

missing_value: -9999.0

references: Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

source: Noah-MP.4.0.1

title: LIS land surface model output- time: 31

- north_south: 215

- east_west: 361

- SoilMoist_profiles: 4

- time(time)datetime64[ns]2018-01-01 ... 2018-01-31

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2018-01-01T00:00:00.000000000', '2018-01-02T00:00:00.000000000', '2018-01-03T00:00:00.000000000', '2018-01-04T00:00:00.000000000', '2018-01-05T00:00:00.000000000', '2018-01-06T00:00:00.000000000', '2018-01-07T00:00:00.000000000', '2018-01-08T00:00:00.000000000', '2018-01-09T00:00:00.000000000', '2018-01-10T00:00:00.000000000', '2018-01-11T00:00:00.000000000', '2018-01-12T00:00:00.000000000', '2018-01-13T00:00:00.000000000', '2018-01-14T00:00:00.000000000', '2018-01-15T00:00:00.000000000', '2018-01-16T00:00:00.000000000', '2018-01-17T00:00:00.000000000', '2018-01-18T00:00:00.000000000', '2018-01-19T00:00:00.000000000', '2018-01-20T00:00:00.000000000', '2018-01-21T00:00:00.000000000', '2018-01-22T00:00:00.000000000', '2018-01-23T00:00:00.000000000', '2018-01-24T00:00:00.000000000', '2018-01-25T00:00:00.000000000', '2018-01-26T00:00:00.000000000', '2018-01-27T00:00:00.000000000', '2018-01-28T00:00:00.000000000', '2018-01-29T00:00:00.000000000', '2018-01-30T00:00:00.000000000', '2018-01-31T00:00:00.000000000'], dtype='datetime64[ns]')

- Albedo_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface albedo

- standard_name :

- surface_albedo

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - CanopInt_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- total canopy water storage

- standard_name :

- total_canopy_water_storage

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - ECanop_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- interception evaporation

- standard_name :

- interception_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - ESoil_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- bare soil evaporation

- standard_name :

- bare_soil_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - GPP_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- gross primary production

- standard_name :

- gross_primary_production

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - LAI_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- leaf area index

- standard_name :

- leaf_area_index

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - LWdown_f_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface downward longwave radiation

- standard_name :

- surface_downwelling_longwave_flux_in_air

- units :

- W m-2

- vmax :

- 750.0

- vmin :

- 0.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Lwnet_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net downward longwave radiation

- standard_name :

- surface_net_downward_longwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - NEE_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net ecosystem exchange

- standard_name :

- net_ecosystem_exchange

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Qg_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- soil heat flux

- standard_name :

- downward_heat_flux_in_soil

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Qh_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- sensible heat flux

- standard_name :

- surface_upward_sensible_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Qle_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- latent heat flux

- standard_name :

- surface_upward_latent_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Qs_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface runoff

- standard_name :

- surface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Qsb_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- subsurface runoff amount

- standard_name :

- subsurface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - RadT_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface radiative temperature

- standard_name :

- surface_radiative_temperature

- units :

- K

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - SWE_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow water equivalent

- standard_name :

- liquid_water_content_of_surface_snow

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - SWdown_f_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface downward shortwave radiation

- standard_name :

- surface_downwelling_shortwave_flux_in_air

- units :

- W m-2

- vmax :

- 1360.0

- vmin :

- 0.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - SnowDepth_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow depth

- standard_name :

- snow_depth

- units :

- m

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Snowcover_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- snow cover

- standard_name :

- surface_snow_area_fraction

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - SoilMoist_tavg(time, SoilMoist_profiles, north_south, east_west)float32dask.array<chunksize=(1, 4, 215, 361), meta=np.ndarray>

- long_name :

- soil moisture content

- standard_name :

- soil_moisture_content

- units :

- m^3 m-3

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 36.71 MiB 1.18 MiB Shape (31, 4, 215, 361) (1, 4, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - Swnet_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net downward shortwave radiation

- standard_name :

- surface_net_downward_shortwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - TVeg_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- vegetation transpiration

- standard_name :

- vegetation_transpiration

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - TWS_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- terrestrial water storage

- standard_name :

- terrestrial_water_storage

- units :

- mm

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - TotalPrecip_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- total precipitation amount

- standard_name :

- total_precipitation_amount

- units :

- kg m-2 s-1

- vmax :

- 0.019999999552965164

- vmin :

- 0.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - lat(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degree_north

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray - lon(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degree_east

- vmax :

- 0.0

- vmin :

- 0.0

Array Chunk Bytes 9.18 MiB 303.18 kiB Shape (31, 215, 361) (1, 215, 361) Count 762 Tasks 31 Chunks Type float32 numpy.ndarray

- DX :

- 0.10000000149011612

- DY :

- 0.10000000149011612

- MAP_PROJECTION :

- EQUIDISTANT CYLINDRICAL

- NUM_SOIL_LAYERS :

- 4

- SOIL_LAYER_THICKNESSES :

- [10.0, 30.000001907348633, 60.000003814697266, 100.0]

- SOUTH_WEST_CORNER_LAT :

- 28.549999237060547

- SOUTH_WEST_CORNER_LON :

- -113.94999694824219

- comment :

- website: http://lis.gsfc.nasa.gov/

- conventions :

- CF-1.6

- institution :

- NASA GSFC

- missing_value :

- -9999.0

- references :

- Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

- source :

- Noah-MP.4.0.1

- title :

- LIS land surface model output

Select a custom range of dates using Python’s built-in slice() method:

lis_output_ds.sel(time=slice('2018-01-01', '2018-01-15'))

<xarray.Dataset>

Dimensions: (time: 15, north_south: 215, east_west: 361, SoilMoist_profiles: 4)

Coordinates:

* time (time) datetime64[ns] 2018-01-01 2018-01-02 ... 2018-01-15

Dimensions without coordinates: north_south, east_west, SoilMoist_profiles

Data variables: (12/26)

Albedo_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

CanopInt_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ECanop_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

ESoil_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

GPP_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

LAI_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

... ...

Swnet_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TVeg_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TWS_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

TotalPrecip_tavg (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lat (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

lon (time, north_south, east_west) float32 dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

Attributes: (12/14)

DX: 0.10000000149011612

DY: 0.10000000149011612

MAP_PROJECTION: EQUIDISTANT CYLINDRICAL

NUM_SOIL_LAYERS: 4

SOIL_LAYER_THICKNESSES: [10.0, 30.000001907348633, 60.000003814697266, 1...

SOUTH_WEST_CORNER_LAT: 28.549999237060547

... ...

conventions: CF-1.6

institution: NASA GSFC

missing_value: -9999.0

references: Kumar_etal_EMS_2006, Peters-Lidard_etal_ISSE_2007

source: Noah-MP.4.0.1

title: LIS land surface model output- time: 15

- north_south: 215

- east_west: 361

- SoilMoist_profiles: 4

- time(time)datetime64[ns]2018-01-01 ... 2018-01-15

- begin_date :

- 20161001

- begin_time :

- 000000

- long_name :

- time

- time_increment :

- 86400

array(['2018-01-01T00:00:00.000000000', '2018-01-02T00:00:00.000000000', '2018-01-03T00:00:00.000000000', '2018-01-04T00:00:00.000000000', '2018-01-05T00:00:00.000000000', '2018-01-06T00:00:00.000000000', '2018-01-07T00:00:00.000000000', '2018-01-08T00:00:00.000000000', '2018-01-09T00:00:00.000000000', '2018-01-10T00:00:00.000000000', '2018-01-11T00:00:00.000000000', '2018-01-12T00:00:00.000000000', '2018-01-13T00:00:00.000000000', '2018-01-14T00:00:00.000000000', '2018-01-15T00:00:00.000000000'], dtype='datetime64[ns]')

- Albedo_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface albedo

- standard_name :

- surface_albedo

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - CanopInt_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- total canopy water storage

- standard_name :

- total_canopy_water_storage

- units :

- kg m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - ECanop_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- interception evaporation

- standard_name :

- interception_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - ESoil_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- bare soil evaporation

- standard_name :

- bare_soil_evaporation

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - GPP_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- gross primary production

- standard_name :

- gross_primary_production

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - LAI_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- leaf area index

- standard_name :

- leaf_area_index

- units :

- -

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - LWdown_f_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface downward longwave radiation

- standard_name :

- surface_downwelling_longwave_flux_in_air

- units :

- W m-2

- vmax :

- 750.0

- vmin :

- 0.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Lwnet_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net downward longwave radiation

- standard_name :

- surface_net_downward_longwave_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - NEE_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- net ecosystem exchange

- standard_name :

- net_ecosystem_exchange

- units :

- g m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Qg_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- soil heat flux

- standard_name :

- downward_heat_flux_in_soil

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Qh_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- sensible heat flux

- standard_name :

- surface_upward_sensible_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Qle_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- latent heat flux

- standard_name :

- surface_upward_latent_heat_flux

- units :

- W m-2

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Qs_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface runoff

- standard_name :

- surface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - Qsb_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- subsurface runoff amount

- standard_name :

- subsurface_runoff_amount

- units :

- kg m-2 s-1

- vmax :

- 999999986991104.0

- vmin :

- -999999986991104.0

Array Chunk Bytes 4.44 MiB 303.18 kiB Shape (15, 215, 361) (1, 215, 361) Count 746 Tasks 15 Chunks Type float32 numpy.ndarray - RadT_tavg(time, north_south, east_west)float32dask.array<chunksize=(1, 215, 361), meta=np.ndarray>

- long_name :

- surface radiative temperature

- standard_name :